Master AI Ethics for a Responsible Digital Future

Artificial intelligence is transforming the world, but with great power comes great responsibility. Dooey Online presents Responsible Automated Intelligence (AI) Ethics Fundamentals, a comprehensive course designed to equip professionals, policymakers, and AI enthusiasts with the expertise to develop and govern AI ethically and responsibly.

What You’ll Learn

Gain a deep understanding of ethical AI principles, practical strategies, and real-world applications to ensure AI serves humanity fairly and transparently.

- Foundations of AI Ethics – Explore core ethical frameworks that guide responsible AI innovation.

- Developing Responsible AI – Learn techniques to minimize bias, enhance transparency, and uphold accountability in AI systems.

- Privacy & Security in AI – Discover best practices for ethical data management and risk mitigation.

- Social & Ethical Impacts – Analyze AI’s influence on employment and society, and its potential for global good.

- AI Governance & Policy – Learn to craft and implement ethical AI policies that align with industry standards and regulations.

Why Enroll in This Course?

Dooey’s AI ethics course is designed for impact, ensuring you gain the necessary knowledge and skills to foster ethical AI adoption.

- Comprehensive & Up-to-Date Curriculum – Covers fundamental ethics, responsible AI design, and governance strategies.

- Hands-On Learning – Follow practical demonstrations of AI tools and responsible AI resources, including Microsoft Responsible AI, ChatGPT, and Bard.

- Industry-Aligned Expertise – Learn from seasoned professionals specializing in AI ethics and governance.

- Real-World Applications – Understand how to implement ethical AI in corporate, governmental, and research settings.

Course Breakdown

- Introduction to AI Ethics – Dive into ethical AI principles, challenges, and global AI ethics standards.

- Responsible AI Development – Address fairness, bias mitigation, and transparency in AI.

- Privacy & Security in AI – Learn ethical data handling and best practices for AI safety.

- Social Impact & Ethical Considerations – Examine AI’s effects on automation and employment, and explore how AI can be used for social good.

- Ethical AI Governance & Policy – Develop strategies for ethical leadership and AI governance.

Who Should Take This Course?

- AI developers and data scientists seeking to integrate ethics into AI design.

- Business leaders and policymakers shaping ethical AI governance.

- Educators, students, and tech enthusiasts eager to understand AI ethics.

Key Benefits

✅ Engaging & Interactive Learning – Whiteboard sessions, case studies, and real-world examples.

✅ Practical Insights – Actionable frameworks for ethical AI implementation.

✅ Flexible, On-Demand Access – Learn at your own pace with online modules designed for busy professionals.

Take the Lead in Ethical AI

Shape the future of responsible AI innovation. Enroll in Responsible Automated Intelligence (AI) Ethics Fundamentals today and become a champion of ethical AI practices.

Course Outline

Introduction – Responsible Automated Intelligence Ethics

Course Welcome

Instructor Introduction

Module 1: Introduction to AI Ethics

1.1 Introduction to AI Ethics

1.2 Understanding AI Ethics

1.3 Ethical Frameworks and Principles in AI

1.4 Ethical Challenges

1.5 Whiteboard – Key Principles of Responsible AI

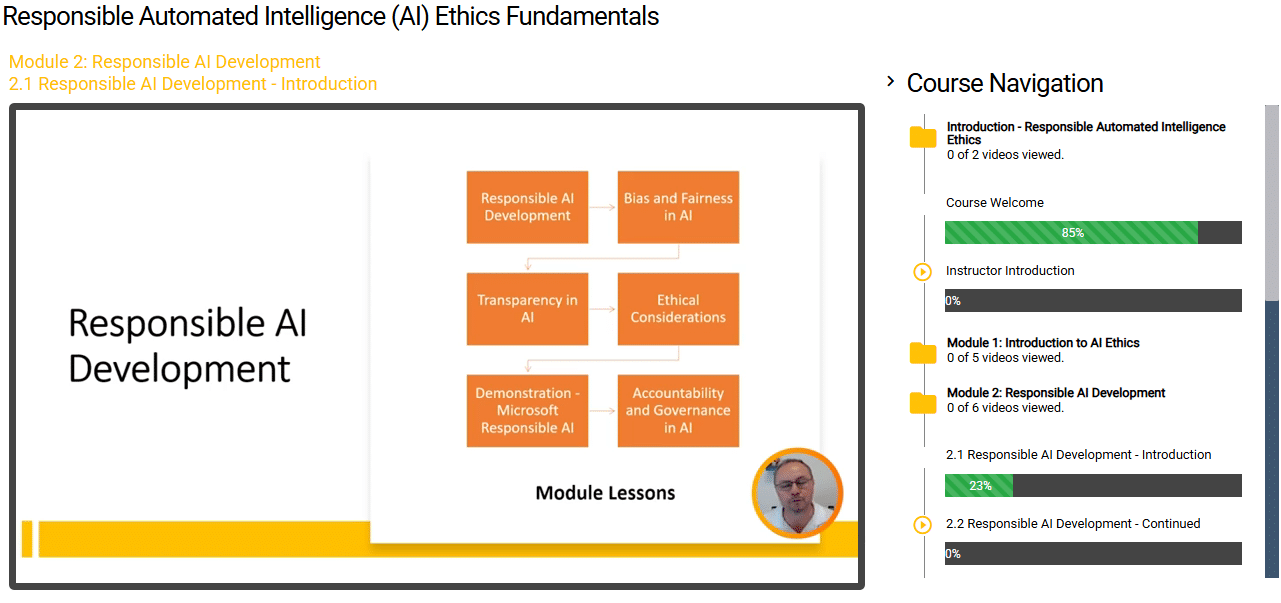

Module 2: Responsible AI Development

2.1 Responsible AI Development – Introduction

2.2 Responsible AI Development – Continued

2.3 Bias and Fairness in AI

2.4 Transparency in AI

2.5 Demonstration – Microsoft Responsible AI

2.6 Accountability and Governance in AI

Module 3: Privacy and Security with AI

3.1 Privacy and Security in AI

3.2 Data Collection and Usage

3.3 Risks and Mitigation Strategies

3.4 Ethical Data Management in AI

3.5 Demonstration – Examples of Privacy EUL

Module 4: Social and Ethical Impacts of AI

4.1 Social and Ethical Impacts of AI

4.2 Automation and Job Displacement

4.3 AI and Social Good

4.4 Demonstration – ChatGPT

4.5 Demonstration – Bard

Module 5: Policy Development

5.1 Policy Development

5.2 Ethical AI Leadership Culture

5.3 Ethical AI Policy Elements

5.4 Ethical AI in a Changing Landscape

5.5 Course Review

5.6 Course Closeout

Frequently Asked Questions Related To Responsible Automated Intelligence (AI) Ethics Fundamentals

What is AI ethics?

AI ethics refers to the study and application of moral principles, guidelines, and best practices that govern the development and use of artificial intelligence. It aims to ensure AI systems operate fairly, transparently, and responsibly while minimizing risks such as bias, discrimination, and privacy violations. AI ethics also considers the broader social impact of AI, promoting technology that benefits individuals and society rather than causing harm.

Why is AI ethics important?

AI ethics is crucial because AI systems influence many aspects of life, from hiring decisions and medical diagnoses to law enforcement and financial services. Without ethical guidelines, AI can perpetuate biases, invade privacy, and make decisions that lack accountability. Ethical AI development ensures fairness, protects user rights, builds trust, and promotes responsible innovation. By prioritizing ethical considerations, organizations can prevent harm, enhance transparency, and create AI solutions that contribute positively to society.

What are the core principles of AI ethics?

The core principles of AI ethics serve as a foundation for responsible AI development and use:

Fairness: AI should treat all individuals equally, avoiding bias and discrimination.

Transparency: AI decision-making processes should be understandable and explainable.

Accountability: Developers and organizations should take responsibility for the outcomes of AI systems.

Privacy and Security: AI must protect user data and ensure it is handled securely.

Social Benefit: AI should be designed to contribute positively to society and human well-being.

Reliability and Safety: AI systems must function as intended, minimizing risks and errors.

How can bias in AI be reduced?

Bias in AI can be reduced through proactive measures at every stage of development:

Using diverse and representative training datasets so that the AI does not learn and reinforce existing biases.

Conducting regular audits and fairness assessments to identify and address potential biases in algorithms.

Implementing explainable AI (XAI) techniques that allow developers and users to understand how an AI system reaches its decisions, making biased patterns easier to detect.

Establishing ethical guidelines and governance frameworks to guide AI design and deployment.

Engaging interdisciplinary teams that include ethicists, domain experts, and affected communities to identify potential bias early.
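To make the "regular audits and fairness assessments" step more concrete, here is a minimal Python sketch of one simple check: comparing how often a model gives a positive outcome to each group. The function names, the toy data, and the choice of metrics (demographic parity difference and the disparate impact ratio, with the commonly cited "four-fifths" threshold of 0.8) are illustrative assumptions, not material taken from the course; a real audit would examine several metrics and use established tooling.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the fraction of positive (1) predictions for each group.

    Assumes predictions are 0/1 integers aligned with the group labels.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def fairness_report(predictions, groups):
    """Summarize group selection rates with two simple parity metrics."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    lowest = min(rates.values())
    return {
        "selection_rates": rates,
        # Demographic parity difference: gap between the highest and
        # lowest group selection rates (0.0 means equal rates).
        "parity_difference": highest - lowest,
        # Disparate impact ratio: lowest rate divided by highest rate.
        # A common rule of thumb flags ratios below 0.8 for review.
        "impact_ratio": lowest / highest if highest > 0 else 1.0,
    }


if __name__ == "__main__":
    # Hypothetical model outputs for two groups of five applicants each.
    preds = [1, 0, 1, 1, 0, 1, 0, 0, 1, 0]
    grps = ["A"] * 5 + ["B"] * 5
    report = fairness_report(preds, grps)
    print(report["selection_rates"])
    print(round(report["parity_difference"], 2))
    print(round(report["impact_ratio"], 2))
    if report["impact_ratio"] < 0.8:
        print("Selection rates differ enough to warrant a closer review.")
```

Here group A receives positive outcomes 60% of the time and group B 40% of the time, so the ratio falls below 0.8 and the check flags it. A flag like this is a prompt for investigation by the interdisciplinary team, not proof of discrimination on its own.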

What is responsible AI development?

Responsible AI development refers to the process of designing, building, and deploying AI systems in a way that aligns with ethical principles and societal values. It involves ensuring that AI operates transparently, fairly, and securely while minimizing risks like discrimination, misinformation, and misuse. Developers must take accountability for their AI models, conduct impact assessments, and implement safeguards to prevent unintended consequences. The goal of responsible AI is to create trustworthy, human-centric technology that enhances lives while respecting legal and ethical standards.

Your Training Instructor

Joe Holbrook

Independent Trainer | Consultant | Author

Joe Holbrook has been in the IT industry since 1993, when he first worked with HP-UX systems aboard a U.S. Navy flagship. Over the years, he transitioned from UNIX to Storage Area Networking (SAN), Enterprise Virtualization, and Cloud Architectures, eventually specializing in Blockchain and Cryptocurrency. He has held roles at companies such as HDS, 3PAR, Brocade, HP, EMC, Northrop Grumman, ViON, Ibasis.net, Chematch.com, SAIC, and Siemens Nixdorf.

Currently, Joe serves as a Subject Matter Expert in Enterprise Cloud and Blockchain Technologies. He is the Chief Learning Officer (CLO) of Techcommanders.com, an e-learning and consulting platform. He also holds multiple IT certifications from AWS, GCP, HDS, and other organizations.

A passionate speaker and well-known course author, Joe resides in Jacksonville, Florida.